Hello Learners…

Welcome to the blog…

Table Of Contents

- Introduction

- What is Devika AI?

- AI Development Insights: Devika Agentic AI Software Engineer

- Devika AI Key Features

- Devika AI System Architecture

- Language Models Used In Devika AI

- Browser View Of Devika AI

- Quick Start With Devika AI

- Summary

- References

Introduction

In this post, we discuss AI development insights from Devika, an agentic AI software engineer.

What is Devika AI?

Devika is an advanced AI software engineer that can understand high-level human instructions, break them down into steps, research relevant information, and write code to achieve the given objective.

It utilizes large language models, planning and reasoning algorithms, and web browsing abilities to intelligently develop software.

Devika aims to revolutionize the way we build software by providing an AI pair programmer who can take on complex coding tasks with minimal human guidance.

Whether you need to create a new feature, fix a bug, or develop an entire project from scratch, Devika is here to assist you.

AI Development Insights: Devika Agentic AI Software Engineer

Devika AI Key Features

- Supports Claude 3, GPT-4, GPT-3.5, and local LLMs via Ollama. For optimal performance, use the Claude 3 family of models.

- Advanced AI planning and reasoning capabilities

- Contextual keyword extraction for focused research

- Seamless web browsing and information gathering

- Code writing in multiple programming languages

- Dynamic agent state tracking and visualization

- Natural language interaction via chat interface

- Project-based organization and management

- Extensible architecture for adding new features and integrations

Devika AI System Architecture

Key Components Of Devika AI

At a high level, Devika consists of the following key components:

- Agent Core: Orchestrates the overall AI planning, reasoning and execution process. Communicates with various sub-agents.

- Agents: Specialized sub-agents that handle specific tasks like planning, research, coding, patching, reporting etc.

- Language Models: Leverages large language models (LLMs) like Claude, GPT-4, GPT-3 for natural language understanding and generation.

- Browser Interaction: Enables web browsing, information gathering, and interaction with web elements.

- Project Management: Handles organization and persistence of project-related data.

- Agent State Management: Tracks and persists the dynamic state of the AI agent across interactions.

- Services: Integrations with external services like GitHub, Netlify for enhanced capabilities.

- Utilities: Supporting modules for configuration, logging, vector search, PDF generation etc.

Let’s dive into each of these components in more detail.

Agent Core Of Devika AI

The Agent class serves as the central engine that drives Devika’s AI planning and execution loop. Here’s how it works:

- When a user provides a high-level prompt, the execute method is invoked on the Agent.

- The prompt is first passed to the Planner agent to generate a step-by-step plan.

- The Researcher agent then takes this plan and extracts relevant search queries and context.

- The Agent performs web searches using Bing Search API and crawls the top results.

- The raw crawled content is passed through the Formatter agent to extract clean, relevant information.

- This researched context, along with the step-by-step plan, is fed to the Coder agent to generate code.

- The generated code is saved to the project directory on disk.

- If the user interacts further with a follow-up prompt, the subsequent_execute method is invoked.

- The Action agent determines the appropriate action to take based on the user’s message (run code, deploy, write tests, add feature, fix bug, write report etc.)

- The corresponding specialized agent is invoked to perform the action (Runner, Feature, Patcher, Reporter).

- Results are communicated back to the user and the project files are updated.
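To make the first half of this flow concrete, here is a minimal sketch of the plan, research, format, code, and save steps chained together. Every function and class name in it is a hypothetical stand-in written for this post, not Devika's actual code, and the search step returns canned HTML instead of calling a real search API.

```python
import re
import tempfile
from dataclasses import dataclass
from pathlib import Path


@dataclass
class PipelineResult:
    plan: list[str]
    queries: list[str]
    context: str
    code: str


def plan_steps(prompt: str) -> list[str]:
    """Planner stand-in: turn the prompt into ordered steps."""
    return [f"Understand the request: {prompt}", "Research the topic", "Write the code"]


def extract_queries(plan: list[str]) -> list[str]:
    """Researcher stand-in: keep only the steps that call for research."""
    return [step.lower() for step in plan if "research" in step.lower()]


def search_and_crawl(queries: list[str]) -> list[str]:
    """Search stand-in: a real agent would query a search API and crawl results."""
    return [f"<html><p>Notes for '{query}'</p></html>" for query in queries]


def format_pages(pages: list[str]) -> str:
    """Formatter stand-in: strip markup and join the remaining text."""
    return "\n".join(re.sub(r"<[^>]+>", "", page) for page in pages)


def write_code(plan: list[str], context: str) -> str:
    """Coder stand-in: emit a trivial program with the plan as comments."""
    header = "\n".join(f"# {step}" for step in plan)
    return f"{header}\nprint('hello from the generated project')\n"


def execute(prompt: str, project_dir: Path) -> PipelineResult:
    """Run each stage in order and save the generated code to disk."""
    plan = plan_steps(prompt)
    queries = extract_queries(plan)
    context = format_pages(search_and_crawl(queries))
    code = write_code(plan, context)
    (project_dir / "main.py").write_text(code)
    return PipelineResult(plan, queries, context, code)


# Usage: run the pipeline against a throwaway project directory.
with tempfile.TemporaryDirectory() as tmp:
    result = execute("build a todo app", Path(tmp))
    saved = (Path(tmp) / "main.py").read_text()
```

Each stage only sees the output of the stage before it, which is what makes it easy to swap a stub for a real LLM-backed agent later.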

Throughout this process, the Agent Core is responsible for:

- Managing conversation history and project-specific context

- Updating agent state and internal monologue

- Accumulating context keywords across agent prompts

- Emulating the “thinking” process of the AI through timed agent state updates

- Handling special commands through the Decision agent (e.g. git clone, browser interaction session)
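The state-keeping duties above can be sketched with a small append-only store. This is only an illustration under assumptions: the AgentState fields, the StateStore class, and the table layout are invented for this post, and SQLite is used simply because it ships with Python.

```python
import json
import sqlite3
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class AgentState:
    """Hypothetical snapshot of what the agent is doing right now."""
    step: str = "idle"
    internal_monologue: str = ""
    context_keywords: list[str] = field(default_factory=list)


def merge_keywords(existing: list[str], new: list[str]) -> list[str]:
    """Accumulate keywords across prompts, keeping order and dropping duplicates."""
    merged = list(existing)
    for keyword in new:
        if keyword not in merged:
            merged.append(keyword)
    return merged


class StateStore:
    """Append-only log of agent states per project, kept in SQLite."""

    def __init__(self, path: str = ":memory:") -> None:
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS agent_state ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, "
            "project TEXT NOT NULL, state TEXT NOT NULL)"
        )

    def push(self, project: str, state: AgentState) -> None:
        self.conn.execute(
            "INSERT INTO agent_state (project, state) VALUES (?, ?)",
            (project, json.dumps(asdict(state))),
        )
        self.conn.commit()

    def history(self, project: str) -> list[AgentState]:
        rows = self.conn.execute(
            "SELECT state FROM agent_state WHERE project = ? ORDER BY id",
            (project,),
        ).fetchall()
        return [AgentState(**json.loads(row[0])) for row in rows]

    def latest(self, project: str) -> Optional[AgentState]:
        states = self.history(project)
        return states[-1] if states else None


# Usage: record two state updates, then resume from the latest one.
store = StateStore()
store.push("todo-app", AgentState("planning", "Breaking the task into steps"))
previous = store.latest("todo-app")
keywords = merge_keywords(previous.context_keywords, ["flask", "sqlite", "flask"])
store.push("todo-app", AgentState("researching", "Looking up docs", keywords))
resumed = store.latest("todo-app")
```

Keeping every update rather than overwriting the last one is what would let a UI replay the agent's "thinking" step by step.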

Agents

Devika’s cognitive abilities are powered by a collection of specialized sub-agents.

Each agent is implemented as a separate Python class. Agents communicate with the underlying LLMs through prompt templates defined in Jinja2 format.

Key agents include:

- Planner

  - Generates a high-level step-by-step plan based on the user’s prompt

  - Extracts focus area and provides a summary

  - Uses few-shot prompting to provide examples of the expected response format

- Researcher

  - Takes the generated plan and extracts relevant search queries

  - Ranks and filters queries based on relevance and specificity

  - Prompts the user for additional context if required

  - Aims to maximize information gain while minimizing number of searches

- Coder

  - Generates code based on the step-by-step plan and researched context

  - Segments code into appropriate files and directories

  - Includes informative comments and documentation

  - Handles a variety of languages and frameworks

  - Validates code syntax and style

- Action

  - Determines the appropriate action to take based on the user’s follow-up prompt

  - Maps user intent to a specific action keyword (run, test, deploy, fix, implement, report)

  - Provides a human-like confirmation of the action to the user

- Runner

  - Executes the written code in a sandboxed environment

  - Handles different OS environments (Mac, Linux, Windows)

  - Streams command output to user in real-time

  - Gracefully handles errors and exceptions

- Feature

  - Implements a new feature based on user’s specification

  - Modifies existing project files while maintaining code structure and style

  - Performs incremental testing to verify feature is working as expected

- Patcher

  - Debugs and fixes issues based on user’s description or error message

  - Analyzes existing code to identify potential root causes

  - Suggests and implements fix, with explanation of the changes made

- Reporter

  - Generates a comprehensive report summarizing the project

  - Includes high-level overview, technical design, setup instructions, API docs etc.

  - Formats report in a clean, readable structure with table of contents

  - Exports report as a PDF document

- Decision

  - Handles special command-like instructions that don’t fit other agents

  - Maps commands to specific functions (git clone, browser interaction etc.)

  - Executes the corresponding function with provided arguments
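The way the Action agent maps a follow-up message to an action keyword and hands off to a specialized agent can be sketched as a small dispatch table. Note the assumptions: in the design described here an LLM makes the classification, whereas this sketch swaps in a naive trigger-word match so it can run offline, and all handler names are hypothetical.

```python
import re
from typing import Callable, Optional


# Hypothetical handlers standing in for the specialized agents.
def run_project(message: str) -> str:
    return "Runner: executing the project"


def add_feature(message: str) -> str:
    return "Feature: implementing the requested change"


def patch_bug(message: str) -> str:
    return "Patcher: investigating the reported problem"


def write_report(message: str) -> str:
    return "Reporter: generating the project report"


HANDLERS: dict[str, Callable[[str], str]] = {
    "run": run_project,
    "fix": patch_bug,
    "report": write_report,
    "implement": add_feature,
}

# Trigger words per action keyword, checked in this order.
TRIGGERS: dict[str, tuple[str, ...]] = {
    "run": ("run", "execute", "start"),
    "fix": ("fix", "bug", "error"),
    "report": ("report", "document"),
    "implement": ("add", "implement", "feature"),
}


def classify(message: str) -> Optional[str]:
    """Return the first action whose trigger word appears in the message."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    for action, triggers in TRIGGERS.items():
        if words.intersection(triggers):
            return action
    return None


def handle_follow_up(message: str) -> tuple[str, str]:
    """Classify the message, then invoke the matching handler."""
    action = classify(message)
    if action is None:
        raise ValueError(f"no action matched: {message!r}")
    return action, HANDLERS[action](message)


# Usage: two follow-up prompts routed to different agents.
fix_result = handle_follow_up("Please fix the login bug.")
run_result = handle_follow_up("Can you run it?")
```

Because the table is plain data, adding a new action only means registering one more keyword and handler.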

Each agent follows a common pattern:

- Prepare a prompt by rendering the Jinja2 template with current context

- Query the LLM to get a response based on the prompt

- Validate and parse the LLM’s response to extract structured output

- Perform any additional processing or side-effects (e.g. save to disk)

- Return the result to the Agent Core for further action

Agents aim to be stateless and idempotent where possible. State and history are managed by the Agent Core and passed into the agents as needed. This allows for a modular, composable design.
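Here is a minimal sketch of that render, query, validate, return loop. To keep it dependency-free it uses Python's built-in string.Template as a stand-in for a Jinja2 template, and fake_llm returns a canned reply instead of calling a model. The prompt wording and the function names are assumptions made for illustration.

```python
import json
from string import Template
from typing import Callable

# Stand-in for a Jinja2 prompt template ($name placeholders instead of {{ name }}).
PROMPT = Template(
    "You are a planner. Reply with JSON containing 'focus' and 'steps'.\n"
    "Conversation so far: $history\n"
    "User request: $request\n"
)


def fake_llm(prompt: str) -> str:
    """Canned reply so the sketch runs offline; a real agent would call an LLM."""
    return 'Sure! {"focus": "todo app", "steps": ["design", "code"]} Hope that helps.'


def parse_response(raw: str) -> dict:
    """Extract the JSON object from the reply and check the expected fields."""
    # str.index raises ValueError when no brace is present.
    payload = raw[raw.index("{"): raw.rindex("}") + 1]
    data = json.loads(payload)
    if "focus" not in data or not isinstance(data.get("steps"), list):
        raise ValueError("response is missing 'focus' or 'steps'")
    return data


def run_agent(request: str, history: list[str],
              llm: Callable[[str], str] = fake_llm) -> dict:
    """Stateless agent call: all context arrives through the arguments."""
    prompt = PROMPT.substitute(request=request, history="; ".join(history) or "(none)")
    return parse_response(llm(prompt))


# Usage: the same inputs always produce the same structured output.
plan = run_agent("build a todo app", [])
```

Since run_agent keeps nothing between calls, the caller decides what history to pass in, which mirrors the split between agents and the Agent Core described above.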

Language Models Used In Devika AI

Devika’s natural language processing capabilities are driven by state-of-the-art LLMs. The LLM class provides a unified interface to interact with different language models:

- Claude (Anthropic): Claude models like claude-v1.3, claude-instant-v1.0 etc.

- GPT-4/GPT-3 (OpenAI): Models like gpt-4, gpt-3.5-turbo etc.

- Self-hosted models (via Ollama): Allows using open-source models in a self-hosted environment

The LLM class abstracts out the specifics of each provider’s API, allowing agents to interact with the models in a consistent way.

It supports:

- Listing available models

- Generating completions based on a prompt

- Tracking and accumulating token usage over time

Choosing the right model for a given use case depends on factors like desired quality, speed, cost etc. The modular design allows swapping out models easily.
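A unified interface of this kind could look like the sketch below. It is an illustration only: the UnifiedLLM class, its method names, and the echo backends are invented for this post, and token usage is approximated by counting whitespace-separated words rather than using a real tokenizer.

```python
from dataclasses import dataclass
from typing import Callable

# A backend is any callable that maps a prompt to a completion.
Backend = Callable[[str], str]


@dataclass
class Usage:
    prompt_tokens: int = 0
    completion_tokens: int = 0


class UnifiedLLM:
    """Hypothetical wrapper exposing one interface over several providers."""

    def __init__(self) -> None:
        self._backends: dict[str, Backend] = {}
        self.usage = Usage()

    def register(self, model_id: str, backend: Backend) -> None:
        self._backends[model_id] = backend

    def list_models(self) -> list[str]:
        return sorted(self._backends)

    def generate(self, model_id: str, prompt: str) -> str:
        if model_id not in self._backends:
            raise KeyError(f"unknown model: {model_id}")
        completion = self._backends[model_id](prompt)
        # Word counts are a crude stand-in for provider-reported token counts.
        self.usage.prompt_tokens += len(prompt.split())
        self.usage.completion_tokens += len(completion.split())
        return completion


# Usage: two fake backends behind the same generate() call.
llm = UnifiedLLM()
llm.register("echo-small", lambda prompt: prompt.upper())
llm.register("echo-large", lambda prompt: f"Answer: {prompt}")
first = llm.generate("echo-large", "hello world")
second = llm.generate("echo-small", "hi")
```

Swapping models then comes down to passing a different model_id, while the usage counters keep accumulating across calls.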

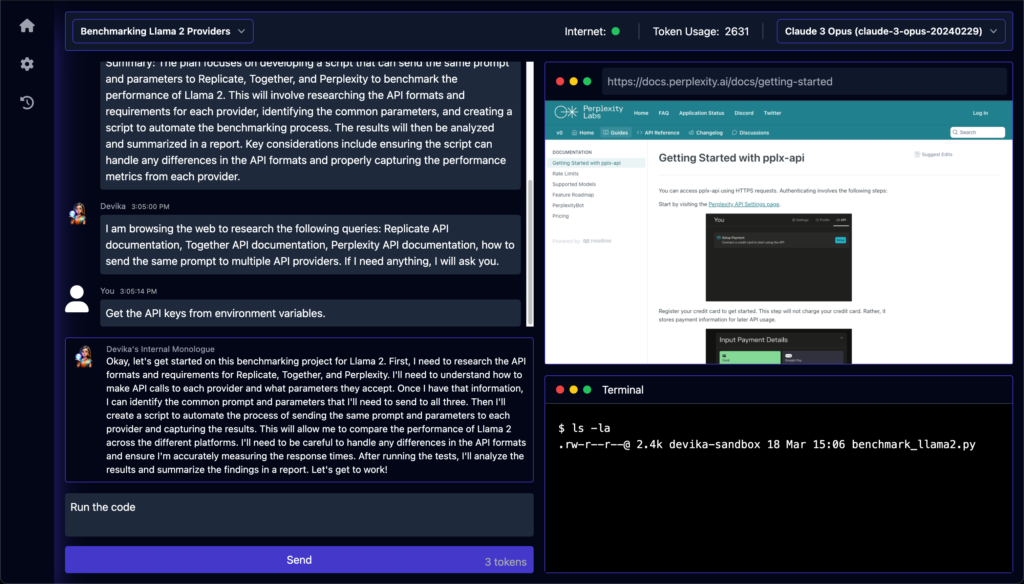

Browser View Of Devika AI

Quick Start With Devika AI

The easiest way to run the project locally:

- Install uv – Python package manager (https://github.com/astral-sh/uv)

- Install bun – JavaScript runtime (https://bun.sh/)

- Install and set up Ollama (https://ollama.com/)

Set the API Keys in the config.toml file. (This will soon be moving to the UI where you can set these keys from the UI itself without touching the command-line, want to implement it? See this issue: #3)

Then execute the following commands. Since bun run dev keeps running, run the last command from the devika/ root in a second terminal:

ollama serve

git clone https://github.com/stitionai/devika.git

cd devika/

uv venv

uv pip install -r requirements.txt

cd ui/

bun install

bun run dev

python3 devika.py

Installations For Devika AI

Devika requires the following things as dependencies:

- Ollama (follow the instructions here to install it: https://ollama.com/)

- Bun (follow the instructions here to install it: https://bun.sh/)

To install Devika, follow these steps:

1. Clone the Devika repository:

git clone https://github.com/stitionai/devika.git

2. Navigate to the project directory:

cd devika

3. Install the required dependencies:

pip install -r requirements.txt

playwright install --with-deps # installs browsers in playwright (and their deps) if required

4. Set up the necessary API keys and configuration in the config.toml file mentioned above.

5. Start the Devika server:

python devika.py

6. Compile and run the UI server:

cd ui/

bun install

bun run dev

7. Access the Devika web interface by opening a browser and navigating to http://127.0.0.1:3000.

Note: This project is currently in a very early development/experimental stage. There are a lot of unimplemented/broken features at the moment. Contributions are welcome to help out with the progress!

Summary

Devika is a complex system that combines multiple AI and automation techniques to deliver an intelligent programming assistant. Key design principles include:

- Modularity: Breaking down functionality into specialized agents and services

- Flexibility: Supporting different LLMs, services and domains in a pluggable fashion

- Persistence: Storing project and agent state in a DB to enable pause/resume and auditing

- Transparency: Surfacing agent thought process and interactions to user in real-time

By understanding how the different components work together, we can extend, optimize and scale Devika to take on increasingly sophisticated software engineering tasks. The agent-based architecture provides a strong foundation to build more advanced AI capabilities in the future.