Hello Learners…

Welcome to my blog…

Table Of Contents

- Introduction

- Falcon-40B: The World’s Premier Open-Source AI Model

- What is Falcon-40B?

- Why use Falcon-40B?

- Falcon Model Uses

- Bias, Risks, and Limitations Of Falcon Model

- How to Get Started with the Falcon-40B Model

- Summary

- References

Introduction

In this post, we look at Falcon-40B, an open-source large language model developed by the Technology Innovation Institute (TII).

Falcon-40B: The World’s Premier Open-Source AI Model

What is Falcon-40B?

Falcon-40B is a 40B-parameter causal decoder-only model built by TII and trained on 1,000B tokens of RefinedWeb enhanced with curated corpora. It is made available under the TII Falcon LLM License.
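
To put the parameter count in perspective, here is a rough back-of-the-envelope sketch of how much memory the weights alone would need. It assumes a nominal 40 billion parameters and ignores activations, caches, and framework overhead, so treat the numbers as a lower bound rather than a hardware requirement. The helper name `weight_memory_gb` is made up for this illustration.

```python
# Rough estimate of the memory needed just to hold the model weights.
# Assumption: activations, caches, and framework overhead are ignored.

BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "float16": 2, "int8": 1}

def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Return the weight footprint in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

NOMINAL_PARAMS = 40e9  # nominal size; the exact parameter count may differ

for dtype in ("float32", "bfloat16", "int8"):
    print(f"{dtype:>8}: ~{weight_memory_gb(NOMINAL_PARAMS, dtype):.0f} GB")
```

Halving the bytes per parameter halves the footprint, which is why lower-precision formats matter so much for a model of this size.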

The Falcon-7B and Falcon-40B pre-trained and instructed models are released under the TII Falcon LLM License. The Falcon-7B/40B models are state-of-the-art for their size, outperforming most other models on NLP benchmarks.

Falcon LLM is TII’s flagship series of large language models, built from scratch using a custom data pipeline and distributed training library.

The RefinedWeb dataset, a massive web dataset with stringent filtering and large-scale deduplication, enables models trained on web data alone to match or outperform models trained on curated corpora. RefinedWeb is licensed under Apache 2.0.

Decoder-Only Models

In the context of AI, a decoder-only model takes an input sequence (the prompt or context) and generates an output that continues it. As the name suggests, it consists of a decoder component without an accompanying encoder. These models are commonly used in tasks such as language generation, where the goal is to produce coherent and meaningful output based on a given input or context. Decoder-only models can be based on recurrent neural networks (RNNs), transformers, or other architectures; Falcon-40B is transformer-based.
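
To make the idea concrete, here is a minimal sketch of the two ingredients usually associated with a transformer-based decoder-only model: a causal mask (each position may only look at itself and earlier positions) and an autoregressive loop that appends one token at a time. The lookup table stands in for a trained model and is purely hypothetical; a real model predicts the next token from learned weights.

```python
from typing import Callable, List, Optional

def causal_mask(n: int) -> List[List[bool]]:
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]

def generate(next_token: Callable[[List[str]], str],
             prompt: List[str],
             max_new_tokens: int,
             eos: Optional[str] = None) -> List[str]:
    """Autoregressive loop: append one predicted token at a time."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        token = next_token(tokens)  # depends only on the tokens so far
        tokens.append(token)
        if token == eos:
            break
    return tokens

# Hypothetical stand-in for a trained model: a fixed lookup on the last token.
TOY_TABLE = {"the": "giraffe", "giraffe": "is", "is": "tall", "tall": "<eos>"}

def toy_next_token(tokens: List[str]) -> str:
    return TOY_TABLE.get(tokens[-1], "<eos>")

print(causal_mask(3))
print(generate(toy_next_token, ["the"], max_new_tokens=10, eos="<eos>"))
```

The loop stops either when the end-of-sequence token appears or when the token budget runs out, which mirrors how generation is usually bounded in practice.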

Why use Falcon-40B?

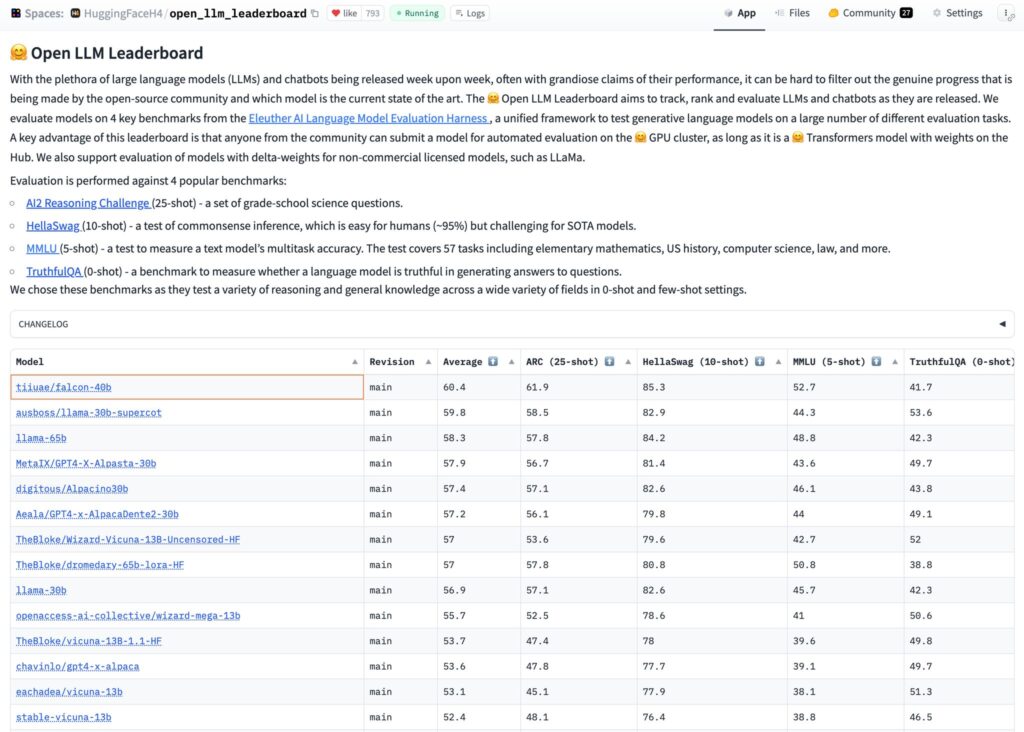

- At the time of writing, it is the top-ranked open-source model: Falcon-40B outperforms LLaMA, StableLM, RedPajama, MPT, and others. See the OpenLLM Leaderboard.

- It features an architecture optimized for inference, with FlashAttention (Dao et al., 2022) and multi-query attention (Shazeer et al., 2019).

- It is made available under a license that allows commercial use, the TII Falcon LLM License.

- It is available in two sizes: 40B and 7B parameters.
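
Why does multi-query attention help at inference time? In standard multi-head attention every query head has its own key and value head, and all of them must be cached for each generated token. In multi-query attention the query heads share a single key/value head, so the cache shrinks accordingly. The sketch below compares the two cache sizes for a hypothetical model shape chosen for round numbers; these are not Falcon's actual dimensions, and `kv_cache_bytes` is a helper invented for this post.

```python
def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values for one sequence."""
    # Factor of 2: one tensor for keys and one for values, in every layer.
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value

# Hypothetical model shape chosen for round numbers; NOT Falcon's real config.
N_LAYERS, N_HEADS, HEAD_DIM, SEQ_LEN = 32, 32, 128, 2048

# Multi-head: one key/value head per query head.
multi_head = kv_cache_bytes(N_LAYERS, N_HEADS, HEAD_DIM, SEQ_LEN)
# Multi-query: a single key/value head shared by all query heads.
multi_query = kv_cache_bytes(N_LAYERS, 1, HEAD_DIM, SEQ_LEN)

print(f"multi-head : {multi_head / 2**20:.0f} MiB")
print(f"multi-query: {multi_query / 2**20:.0f} MiB")
print(f"reduction  : {multi_head // multi_query}x")
```

Under these assumptions the cache shrinks by a factor equal to the number of query heads, which leaves more memory for longer sequences or larger batches.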

You can find more details here:

- Falcon Model 40B

- Falcon Model 7B

- Technology Innovation Institute Hugging Face org page

- The Open LLM Leaderboard

Falcon Model Uses

Direct Use

- We can use this model for research on large language models, and as a foundation for further specialization and fine-tuning for specific use cases (e.g., summarization, text generation, chatbots, etc.).

Out-of-Scope Use

- Production use without adequate assessment of risks and mitigation; any use cases which may be considered irresponsible or harmful.

Bias, Risks, and Limitations Of Falcon Model

Falcon-40B is trained mostly on English, German, Spanish, and French, with limited capabilities also in Italian, Portuguese, Polish, Dutch, Romanian, Czech, and Swedish. It will not generalize appropriately to other languages. Furthermore, as it is trained on large-scale corpora representative of the web, it will carry the stereotypes and biases commonly encountered online.

How to Get Started with the Falcon-40B Model

Here is a simple example of how we can use Falcon Models.

    from transformers import AutoTokenizer
    import transformers
    import torch

    # Model identifier on the Hugging Face Hub
    model = "tiiuae/falcon-40b"

    tokenizer = AutoTokenizer.from_pretrained(model)

    # Build a text-generation pipeline around the model
    pipeline = transformers.pipeline(
        "text-generation",
        model=model,
        tokenizer=tokenizer,
        torch_dtype=torch.bfloat16,  # load the weights in bfloat16
        trust_remote_code=True,      # allow the model's custom code to run
        device_map="auto",           # place the model on the available devices
    )

    # Generate one sampled continuation of the prompt
    sequences = pipeline(
        "Girafatron is obsessed with giraffes, the most glorious animal on the face of this Earth. Giraftron believes all other animals are irrelevant when compared to the glorious majesty of the giraffe.\nDaniel: Hello, Girafatron!\nGirafatron:",
        max_length=200,              # total length limit, prompt included
        do_sample=True,              # sample instead of greedy decoding
        top_k=10,                    # sample only among the 10 most likely tokens
        num_return_sequences=1,
        eos_token_id=tokenizer.eos_token_id,
    )

    for seq in sequences:
        print(f"Result: {seq['generated_text']}")
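
The `do_sample=True` and `top_k=10` arguments above ask the model to pick each next token at random from its 10 most likely candidates, instead of always taking the single most likely one. Here is a minimal pure-Python sketch of that idea. It illustrates the technique only and is not the transformers library's implementation; the `top_k_sample` helper and the example scores are hypothetical.

```python
import math
import random

def top_k_sample(logits, k, rng=random):
    """Sample an index from the k highest-scoring entries of `logits`."""
    # Keep the indices of the k largest scores.
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax numerators over the kept scores (subtract the max for stability).
    m = max(logits[i] for i in top)
    weights = [math.exp(logits[i] - m) for i in top]
    # choices() normalizes the weights internally before drawing.
    return rng.choices(top, weights=weights, k=1)[0]

# Hypothetical scores for a 6-token vocabulary.
logits = [2.0, 0.5, 3.1, -1.0, 0.0, 1.2]

rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [top_k_sample(logits, k=3, rng=rng) for _ in range(1000)]
print(sorted(set(samples)))  # only indices among the 3 best scores can appear
```

With `k=1` this reduces to greedy decoding, while a larger `k` allows more varied output at the cost of occasionally picking less likely tokens.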

Summary

This was a simple introduction to the Falcon-40B AI model, which is a game changer in the world of open-source AI models. It opens new ways for researchers to develop new, highly capable models.

If you want to learn more about these types of models, you can also refer to my other posts.

Happy Learning And Keep Learning…

Thank You…