Hello Learners…

Welcome to the blog…

Table Of Contents

- Introduction

- How to Create Multi-User Conversational RAG Systems

  - Historical Conversation Tracking

  - Context Retrieval and Answer Generation

- Summary

- References

Introduction

In this post, we look at how to create multi-user conversational RAG systems.

How to Create Multi-User Conversational RAG Systems

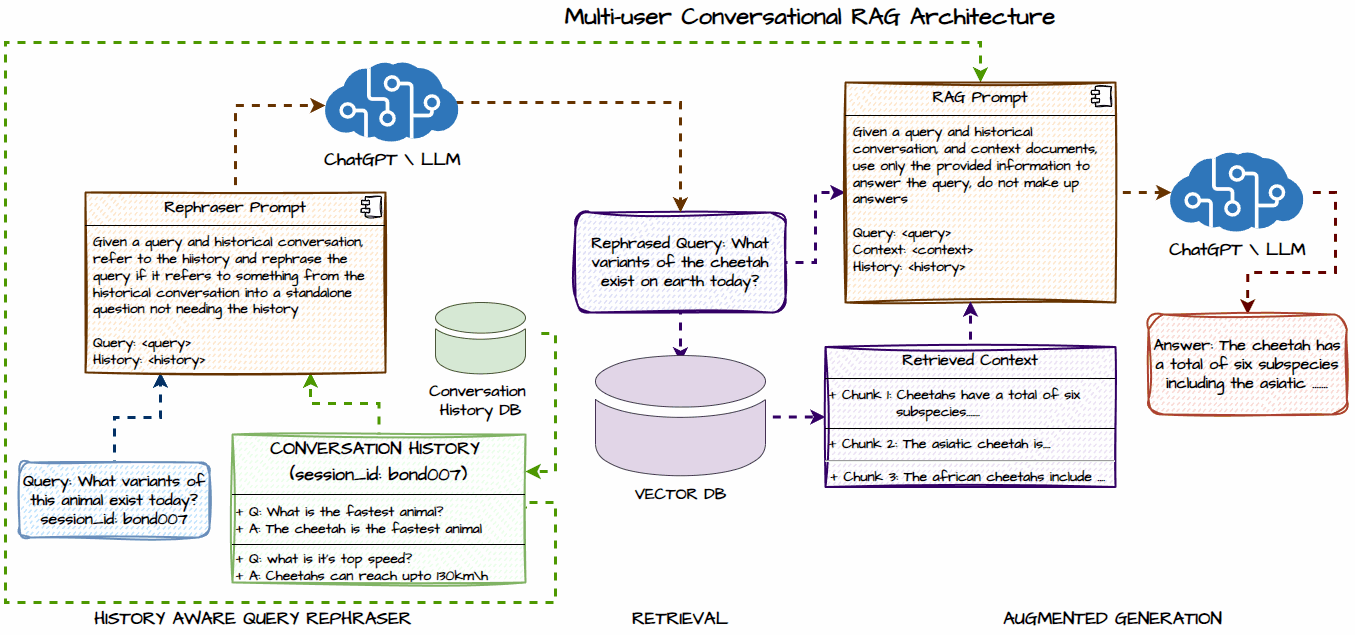

Traditional Retrieval-Augmented Generation (RAG) systems work best when queries are specific and self-contained. In most real-world chatbot interactions, however, users converse naturally: they refer back to earlier turns, rarely repeat the same terms, and seldom ask highly specific questions. How can we address this challenge? The illustration above shows a multi-user conversational RAG system.

We can follow two major pipelines to implement a system like this:

- Historical Conversation Tracking:

  - Uses a SQL database to store the historical conversation for each user session.

  - Uses any Large Language Model (LLM) with a rephrasing prompt to rewrite a new query as a standalone question when it refers to the history.

- Context Retrieval and Answer Generation:

  - The rephrased query is sent to the Vector DB to retrieve context documents.

  - The retrieved context and the conversation history are sent to the LLM to generate more accurate and relevant responses.
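The two pipelines above can be sketched in a few lines of Python. This is only a minimal sketch under stated assumptions, not a reference implementation: the table schema, the prompt wording, and the names `ChatHistoryStore`, `build_rephrase_prompt`, `build_answer_prompt`, and `answer` are all hypothetical, and `llm` and `retrieve` are plain callables standing in for whichever LLM client and Vector DB you actually use.

```python
import sqlite3
from typing import Callable, List, Tuple

Turn = Tuple[str, str]  # (role, content)


class ChatHistoryStore:
    """Keeps chat turns per session in SQLite (hypothetical schema)."""

    def __init__(self, path: str = ":memory:") -> None:
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS messages ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, "
            "session_id TEXT NOT NULL, role TEXT NOT NULL, content TEXT NOT NULL)"
        )

    def add(self, session_id: str, role: str, content: str) -> None:
        with self.conn:  # commits on success, rolls back on error
            self.conn.execute(
                "INSERT INTO messages (session_id, role, content) VALUES (?, ?, ?)",
                (session_id, role, content),
            )

    def recent(self, session_id: str, limit: int = 6) -> List[Turn]:
        rows = self.conn.execute(
            "SELECT role, content FROM messages WHERE session_id = ? "
            "ORDER BY id DESC LIMIT ?",
            (session_id, limit),
        ).fetchall()
        return rows[::-1]  # return oldest turn first


def format_history(turns: List[Turn]) -> str:
    return "\n".join(f"{role}: {content}" for role, content in turns)


def build_rephrase_prompt(history: List[Turn], question: str) -> str:
    return (
        "Given the conversation below, rewrite the follow-up question as a "
        "standalone question. If it is already standalone, return it unchanged.\n\n"
        f"Conversation:\n{format_history(history)}\n\n"
        f"Follow-up question: {question}\nStandalone question:"
    )


def build_answer_prompt(history: List[Turn], docs: List[str], question: str) -> str:
    context = "\n---\n".join(docs)
    return (
        "Answer the question using the context and the conversation so far.\n\n"
        f"Context:\n{context}\n\nConversation:\n{format_history(history)}\n\n"
        f"Question: {question}\nAnswer:"
    )


def answer(
    store: ChatHistoryStore,
    session_id: str,
    question: str,
    llm: Callable[[str], str],             # assumption: prompt in, text out
    retrieve: Callable[[str], List[str]],  # assumption: query in, documents out
) -> str:
    history = store.recent(session_id)
    # Pipeline 1: rephrase only when there is history the query could refer to.
    standalone = llm(build_rephrase_prompt(history, question)) if history else question
    # Pipeline 2: retrieve with the rephrased query, then answer with context + history.
    docs = retrieve(standalone)
    reply = llm(build_answer_prompt(history, docs, question))
    store.add(session_id, "user", question)
    store.add(session_id, "assistant", reply)
    return reply
```

Because every read and write is keyed by `session_id`, two users talking to the same bot never see each other's history. The `limit` argument caps how many past turns go into the prompts, which is one simple way to keep them from growing without bound.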

Key to Success

Managing the historical context intelligently is crucial for enhancing conversational and search experiences. It is recommended to use either file-backed or database-backed conversation persistence for effective historical context management.
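For the file-backed option, a minimal sketch could keep one JSON file per session. The class name `FileChatHistory`, the one-file-per-session layout, and the rule for valid session ids are all assumptions made for illustration; a production setup would also need to handle concurrent writers.

```python
import json
from pathlib import Path
from typing import Dict, List


class FileChatHistory:
    """Stores each session's turns in its own JSON file (hypothetical layout)."""

    def __init__(self, root: str) -> None:
        self.root = Path(root)
        self.root.mkdir(parents=True, exist_ok=True)

    def _path(self, session_id: str) -> Path:
        # Reject ids that could escape the root folder or collide after cleaning.
        if not session_id or not all(c.isalnum() or c in "-_" for c in session_id):
            raise ValueError(f"invalid session id: {session_id!r}")
        return self.root / f"{session_id}.json"

    def load(self, session_id: str) -> List[Dict[str, str]]:
        path = self._path(session_id)
        if not path.exists():
            return []  # a new session starts with empty history
        return json.loads(path.read_text(encoding="utf-8"))

    def append(self, session_id: str, role: str, content: str) -> None:
        turns = self.load(session_id)
        turns.append({"role": role, "content": content})
        self._path(session_id).write_text(
            json.dumps(turns, ensure_ascii=False, indent=2), encoding="utf-8"
        )
```

Files are easy to inspect and need no server, which suits prototypes. A database is usually the better fit once many sessions are written at the same time.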

Note: Is there any other way? Please comment.

Summary

Creating a multi-user conversational Retrieval-Augmented Generation (RAG) system enhances chatbot interactions by handling fluid, real-world conversations. By tracking historical conversations and intelligently rephrasing queries, the system retrieves relevant context to generate accurate responses, improving the overall user experience.