Hello Learners…

Welcome to the blog…

Table Of Contents

- Introduction

- IDEFICS: Advanced Visual Language Model

- Summary

- References

Introduction

In this post, we introduce IDEFICS: Advanced Visual Language Model. We can use it for a chat with images.

What is IDEFICS?

We are excited to release IDEFICS (Image-aware Decoder Enhanced à la Flamingo with Interleaved Cross-attentionS), an open-access visual language model. IDEFICS is based on Flamingo, a state-of-the-art visual language model initially developed by DeepMind, which has not been released publicly.

Similarly to GPT-4, the model accepts arbitrary sequences of image and text inputs and produces text outputs.

IDEFICS: Advanced Visual Language Model

IDEFICS, a multimodal model with 80 billion parameters, accepts sequences of images and texts as input, generating coherent text as output. It has the capability to answer questions about images, describe visual content, and craft narratives rooted in multiple images.

IDEFICS serves as an open-access reproduction of Flamingo, offering performance with the original closed-source model across diverse image-text understanding benchmarks. It is available in two variants, 80 billion and 9 billion parameters respectively.

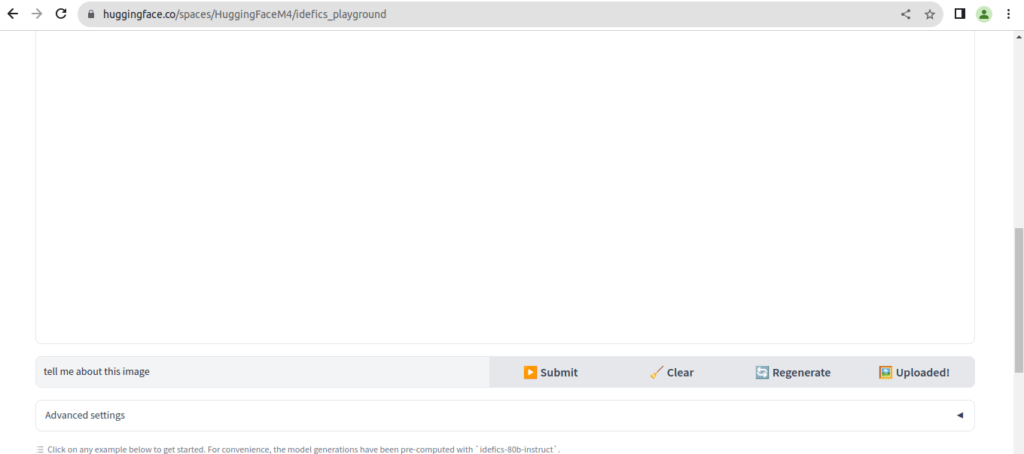

Demo: https://huggingface.co/spaces/HuggingFaceM4/idefics_playground

Here we can upload our images and ask questions related to our images.

They also provide fine-tuned versions idefics-80B-instruct and idefics-9B-instruct adapted for conversational use cases.

Training Data

IDEFICS was trained on a mixture of openly available datasets: Wikipedia, Public Multimodal Dataset, and LAION, as well as a new 115B token dataset called OBELICS that we created. OBELICS consists of 141 million interleaved image-text documents scraped from the web and contains 353 million images.

We provide an interactive visualization of OBELICS that allows exploring the content of the dataset with Nomic AI.

Getting Started with IDEFICS

IDEFICS models are available on the Hugging Face Hub and supported in the last transformers version.

Here is a code sample to try it out:

import torch

from transformers import IdeficsForVisionText2Text, AutoProcessor

device = "cuda" if torch.cuda.is_available() else "cpu"

checkpoint = "HuggingFaceM4/idefics-9b-instruct"

model = IdeficsForVisionText2Text.from_pretrained(checkpoint, torch_dtype=torch.bfloat16).to(device)

processor = AutoProcessor.from_pretrained(checkpoint)

# We feed to the model an arbitrary sequence of text strings and images. Images can be either URLs or PIL Images.

prompts = [

[

"User: What is in this image?",

"https://upload.wikimedia.org/wikipedia/commons/8/86/Id%C3%A9fix.JPG",

"<end_of_utterance>",

"\nAssistant: This picture depicts Idefix, the dog of Obelix in Asterix and Obelix. Idefix is running on the ground.<end_of_utterance>",

"\nUser:",

"https://static.wikia.nocookie.net/asterix/images/2/25/R22b.gif/revision/latest?cb=20110815073052",

"And who is that?<end_of_utterance>",

"\nAssistant:",

],

]

# --batched mode

inputs = processor(prompts, add_end_of_utterance_token=False, return_tensors="pt").to(device)

# Generation args

exit_condition = processor.tokenizer("<end_of_utterance>", add_special_tokens=False).input_ids

bad_words_ids = processor.tokenizer(["<image>", "<fake_token_around_image>"], add_special_tokens=False).input_ids

generated_ids = model.generate(**inputs, eos_token_id=exit_condition, bad_words_ids=bad_words_ids, max_length=100)

generated_text = processor.batch_decode(generated_ids, skip_special_tokens=True)

for i, t in enumerate(generated_text):

print(f"{i}:\n{t}\n")

Summary

IDEFICS isn’t just a model, it’s a movement toward transparency, accessibility, and shared progress. As you explore its capabilities through our interactive demo and Hub, we invite you to join us in the era of open research and collaborative innovation.

Let IDEFICS be your stepping stone toward unlocking the full potential of multimodal AI and reshaping the way we interact with technology.

Also, you can refer to this for more learning,