Hello Learners…

Welcome to the blog…

Table Of Contents

- Introduction

- What Is Vector Search Or Vector Similarity Search?

- Store Data Using Vector Embeddings And Vector Stores With LangChain And OpenAI

- Summary

- References

Introduction

This post discusses how to store data using vector embeddings and vector stores with LangChain and OpenAI.

After splitting the documents into small, semantically meaningful chunks, it’s time to put these chunks into an index so that we can easily retrieve them when we need to answer questions about this corpus of data.

To do that we are going to utilize embeddings and vector stores.

Let’s try to understand what they are.

What Is Vector Search Or Vector Similarity Search?

Vector search, also known as vector similarity search or similarity search, is a technique used in information retrieval and data mining to find items in a dataset that are similar to a given query item, based on their vector representations. In this context, a “vector” refers to a mathematical representation of an item or data point in a multi-dimensional space.
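To make "similar vectors" a bit more concrete, here is a minimal sketch of cosine similarity, one common way to compare two vectors. This is only an illustration: the three vectors are made up, and a real vector store may use a different metric (for example Euclidean distance).

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Hypothetical 3-dimensional vectors, made up for illustration only.
query = [1.0, 2.0, 0.0]
item_close = [2.0, 4.0, 0.1]  # points in almost the same direction as query
item_far = [0.0, 0.1, 3.0]    # points in a very different direction

print(cosine_similarity(query, item_close))
print(cosine_similarity(query, item_far))
```

A value close to 1 means the two vectors point in nearly the same direction, while a value close to 0 means they have little in common.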

Store Data Using Vector Embeddings And Vector Stores With LangChain And OpenAI

Let’s talk about vector stores and embeddings. This step comes after text splitting, when we’re ready to store the documents in an easily accessible format. Embeddings and vector stores are incredibly important for building chatbots over your data. We’re also going to go a bit deeper and talk about edge cases where this generic method can fail.

Embeddings take a piece of text and create a numerical representation of that text. Text with similar content will have similar vectors in this numeric space.

What that means is we can then compare those vectors and find pieces of text that are similar. For example, two sentences about pets will have very similar vectors, while a sentence about a pet and a sentence about a car will not.

As a reminder of the full end-to-end workflow: we start with documents, we then create smaller splits of those documents, we then create embeddings of those splits, and finally we store all of those embeddings in a vector store.

A vector store is a database where you can easily look up similar vectors later on. This will become useful when we’re trying to find documents that are relevant to the question at hand. We can then take the question at hand, create an embedding, and then do comparisons to all the different vectors in the vector store, and then pick the n most similar.

We then take those n most similar chunks and pass them along with the question into an LLM, and get back an answer. For now, it’s time to deep dive into embeddings and vector stores themselves.
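The lookup described above can be sketched in a few lines of plain Python. This is a toy illustration, not how Chroma works internally: the "store" is just a list, the embeddings are hand-written 3-dimensional vectors, and `top_n` is a hypothetical helper name.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def top_n(query_vector, store, n):
    """Return the n (text, score) pairs most similar to query_vector."""
    scored = [(text, cosine_similarity(query_vector, vec)) for text, vec in store]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:n]


# Hypothetical hand-written "embeddings"; a real model produces vectors
# with hundreds or thousands of dimensions.
store = [
    ("My dog likes to chase squirrels.", [0.9, 0.1, 0.0]),
    ("My cat refuses to eat in front of people.", [0.8, 0.2, 0.1]),
    ("My car needs an oil change.", [0.0, 0.1, 0.9]),
]

# Pretend this is the embedding of a question about pets.
query_vector = [0.85, 0.15, 0.05]

results = top_n(query_vector, store, n=2)
for text, score in results:
    print(round(score, 3), text)
```

With these made-up vectors, the two pet sentences are returned and the car sentence is left out. A real vector store does the same job, but with indexing that avoids comparing the query against every stored vector.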

Store data using vector embeddings and vector stores with Python LangChain and OpenAI

First, we have to install the required libraries:

pip install pypdf langchain openai chromadb python-dotenv

Document Loading

After installing the required libraries, we load the data from our source. Here, we are using a PDF file:

from langchain.document_loaders import PyPDFLoader

# Load PDF
loaders = [
    PyPDFLoader("Natural Language Processing with Python-11-20.pdf"),
]

docs = []
for loader in loaders:
    docs.extend(loader.load())

Document Splitting

This converts the data in our PDF file into documents. Now we split those documents into small chunks.

# Split
from langchain.text_splitter import RecursiveCharacterTextSplitter

text_splitter = RecursiveCharacterTextSplitter(
    chunk_size=1500,
    chunk_overlap=150
)

splits = text_splitter.split_documents(docs)

len(splits)

#Output
21

Here is one example of the documents generated by the text splitter:

#splits[0]

Document(page_content='Preface\nThis is a book about Natural Language Processing. By “natural language” we mean a\nlanguage \nthat is used for everyday communication by humans; languages such as Eng-\nlish, Hindi, or Portuguese. In contrast to artificial languages such as programming lan-\nguages and mathematical notations, natural languages have evolved as they pass from\ngeneration to generation, and are hard to pin down with explicit rules. We will take\nNatural Language Processing—or NLP for short—in a wide sense to cover any kind of\ncomputer manipulation of natural language. At one extreme, it could be as simple as\ncounting word frequencies to compare different writing styles. At the other extreme,\nNLP involves “understanding” complete human utterances, at least to the extent of\nbeing able to give useful responses to them.\nTechnologies based on NLP are becoming increasingly widespread. For example,\nphones and handheld computers support predictive text and handwriting recognition;\nweb search engines give access to information locked up in unstructured text; machine\ntranslation allows us to retrieve texts written in Chinese and read them in Spanish. By\nproviding more natural human-machine interfaces, and more sophisticated access to\nstored information, language processing has come to play a central role in the multi-\nlingual information society.\nThis book provides a highly accessible introduction to the field of NLP. It can be used', metadata={'source': 'Natural Language Processing with Python-11-20.pdf', 'page': 0})
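To build some intuition for what chunk_size and chunk_overlap mean, here is a simplified sketch of a fixed-size sliding window over a string. Note that this is not how RecursiveCharacterTextSplitter works internally (it tries to split on separators such as paragraph and line breaks first); `split_with_overlap` is a hypothetical helper written only for illustration.

```python
def split_with_overlap(text, chunk_size, chunk_overlap):
    """Cut text into windows of chunk_size characters that overlap by chunk_overlap."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current window has reached the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks


text = "abcdefghijklmnopqrstuvwxyz"
chunks = split_with_overlap(text, chunk_size=10, chunk_overlap=3)
print(chunks)
# ['abcdefghij', 'hijklmnopq', 'opqrstuvwx', 'vwxyz']
```

Each chunk repeats the last three characters of the previous one, so content that falls on a chunk boundary still appears with some surrounding context in at least one chunk.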

Vector Store and Embeddings

It’s time to move on to the next step: creating embeddings for all the chunks of the PDF and storing them in a vector store. We’ll use OpenAI to create these embeddings.

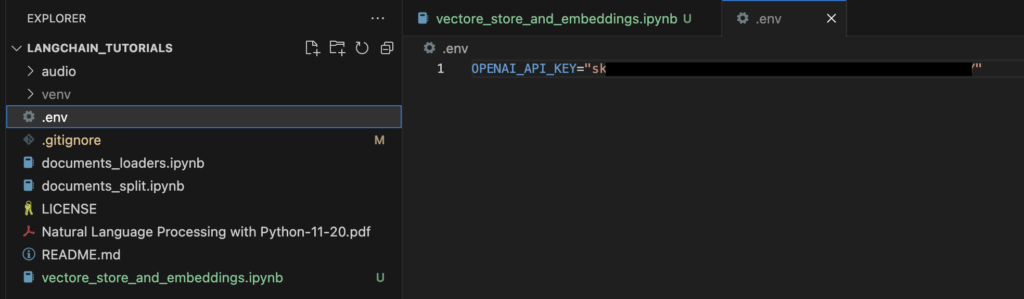

Note: To use OpenAI embeddings, you need a paid OpenAI API key.

import os
import openai
from dotenv import load_dotenv, find_dotenv

_ = load_dotenv(find_dotenv()) # read local .env file
openai.api_key = os.environ['OPENAI_API_KEY']

from langchain.embeddings.openai import OpenAIEmbeddings

embedding = OpenAIEmbeddings()

The vector store that we’ll use for this lesson is Chroma, which we’ll import shortly. LangChain has integrations with over 30 different vector stores. We chose Chroma because it’s lightweight and in-memory, which makes it very easy to get up and running with.

We’re going to want to save this vector store so that we can use it in future lessons. So, let’s create a variable called persist_directory that points to ./docs/chroma, which we will use later on.

Let’s also just make sure that nothing is there already.
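The cleanup in this step uses the %rm magic, which only works inside a notebook. If you run the code as a plain Python script instead, a minimal sketch of the same cleanup with the standard library might look like this (`reset_directory` is a hypothetical helper name, and the path is assumed to be the same docs/chroma/ directory):

```python
import shutil
from pathlib import Path


def reset_directory(path):
    """Delete the directory at `path` and its contents; do nothing if it is missing."""
    shutil.rmtree(path, ignore_errors=True)


persist_directory = "docs/chroma/"
reset_directory(persist_directory)
print(Path(persist_directory).exists())
```

In a notebook, the shorter version that follows does the same thing.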

persist_directory = 'docs/chroma/'

%rm -rf ./docs/chroma # remove the database files if they already exist

Let’s now create the vector store.

from langchain.vectorstores import Chroma

vectordb = Chroma.from_documents(
    documents=splits,
    embedding=embedding,
    persist_directory=persist_directory
)

We pass in the splits that we created earlier, the embedding (this is the OpenAI embedding model), and the persist directory, which is a Chroma-specific keyword argument that allows us to save the vector store to disk.

print(vectordb._collection.count())

#output
21

If we take a look at the collection count after doing this, we can see that it’s 21.

Let’s think of a question that we can ask of this data.

question = "what is nlp"

docs = vectordb.similarity_search(question, k=3)

len(docs) #output=3

We use the similarity search method and pass in the question along with k=3. This specifies the number of documents that we want to return.

You can also play around with changing k, the number of documents that you retrieve. You’ll probably notice that when you make it larger, you’ll retrieve more documents, but the documents towards the tail end may not be as relevant as the ones at the beginning.

docs[0].page_content

Finally, let’s persist the vector database so that we can use it in future lessons.

vectordb.persist()

This has covered the basics of semantic search and shown us that we can get pretty good results based on embeddings alone.

Summary

The use of vector embeddings and vector stores in data storage and retrieval represents a significant advancement in the field of data representation and management.


Happy Learning And Keep Learning…

Thank You…