Hello Learners…

Welcome to the blog…

Title: Zephyr-7B: The Powerful Language Model for Education and Research

Table of Contents

- Introduction

- Zephyr-7B: The Powerful Language Model for Education and Research

- Zephyr-7B-alpha Demo By HuggingFace

- Summary

- References

Introduction

In this post, we discuss Zephyr-7B, a powerful language model for education and research.

In the ever-evolving landscape of natural language processing, language models have rapidly transformed the way we interact with technology.

These AI-driven models have become our trusted companions, helping us communicate, create, and comprehend language in more advanced and intuitive ways than ever before. Among this new generation of language models, one name stands out: Zephyr.

Zephyr-7B: The Powerful Language Model for Education and Research

Zephyr is a series of language models that are trained to act as helpful assistants.

Zephyr-7B-α, the first model in the series, is a fine-tuned version of mistralai/Mistral-7B-v0.1, trained on a combination of publicly available synthetic datasets using Direct Preference Optimization (DPO).

The model's authors found that removing the in-built alignment of these datasets boosted performance on MT Bench and made the model more helpful.

However, this also means the model is likely to generate problematic text when prompted to do so, so it should be used only for educational and research purposes.
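To make the DPO step a little more concrete, here is a minimal sketch of the per-pair DPO loss in plain Python. It illustrates the general DPO objective under stated assumptions and is not the training code used for Zephyr: the function name `dpo_loss`, the `beta` value of 0.1, and the log-probabilities in the usage lines are all hypothetical.

```python
import math

def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Per-pair DPO loss from summed token log-probabilities.

    beta=0.1 is an illustrative default, not a value taken from this post.
    """
    # Implicit rewards: how much more (in log-prob) the policy likes each
    # response than the frozen reference model does, scaled by beta.
    chosen_reward = beta * (policy_chosen_logp - ref_chosen_logp)
    rejected_reward = beta * (policy_rejected_logp - ref_rejected_logp)
    margin = chosen_reward - rejected_reward
    # -log(sigmoid(margin)), written in a numerically stable form.
    if margin >= 0:
        loss = math.log1p(math.exp(-margin))
    else:
        loss = -margin + math.log1p(math.exp(margin))
    return loss, chosen_reward, rejected_reward

# Made-up log-probabilities for one (chosen, rejected) pair.
loss, chosen_reward, rejected_reward = dpo_loss(-290.0, -330.0, -295.0, -320.0)
print(loss, chosen_reward, rejected_reward)
```

The loss shrinks as the policy ranks the chosen response further above the rejected one relative to the reference model, which is the behaviour DPO training pushes towards.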


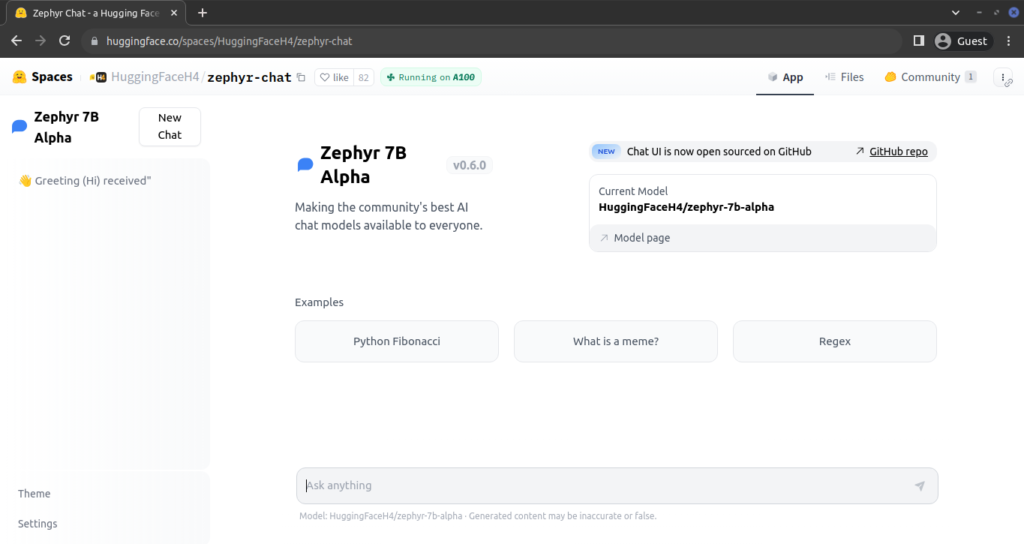

Zephyr-7B-alpha Demo By HuggingFace

A live demo of Zephyr-7B-alpha is hosted by Hugging Face:

Demo URL: https://huggingface.co/spaces/HuggingFaceH4/zephyr-chat

Model description

- Model type: A 7B parameter GPT-like model fine-tuned on a mix of publicly available, synthetic datasets.

- Language(s) (NLP): Primarily English

- License: MIT

- Finetuned from model: mistralai/Mistral-7B-v0.1
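The 7B parameter count gives a rough idea of the hardware needed to load the model. Here is a back-of-the-envelope sketch, assuming a round 7 billion parameters (the exact count is somewhat higher) and counting only the weights, not activations or the generation cache. The helper name `weight_memory_gb` is hypothetical.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Approximate memory for the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9

NUM_PARAMS = 7e9  # assumption: a round 7 billion parameters

# float32 stores 4 bytes per parameter, bfloat16 stores 2.
for dtype_name, nbytes in [("float32", 4), ("bfloat16", 2)]:
    print(f"{dtype_name}: ~{weight_memory_gb(NUM_PARAMS, nbytes):.0f} GB")
# float32: ~28 GB
# bfloat16: ~14 GB
```

This is why loading in bfloat16, as the pipeline() call in this post does, roughly halves the memory needed compared with float32.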

Model Sources

- Repository: https://github.com/huggingface/alignment-handbook

- Demo: https://huggingface.co/spaces/HuggingFaceH4/zephyr-chat

Here’s how we can run the model using the pipeline() function from 🤗 Transformers:

    import torch
    from transformers import pipeline

    pipe = pipeline(
        "text-generation",
        model="HuggingFaceH4/zephyr-7b-alpha",
        torch_dtype=torch.bfloat16,
        device_map="auto",
    )

    # We use the tokenizer's chat template to format each message - see
    # https://huggingface.co/docs/transformers/main/en/chat_templating
    messages = [
        {
            "role": "system",
            "content": "You are a friendly chatbot who always responds in the style of a pirate",
        },
        {"role": "user", "content": "How many helicopters can a human eat in one sitting?"},
    ]

    # Turn the message list into a single prompt string ending with an open assistant turn.
    prompt = pipe.tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

    # Sample up to 256 new tokens.
    outputs = pipe(prompt, max_new_tokens=256, do_sample=True, temperature=0.7, top_k=50, top_p=0.95)

    print(outputs[0]["generated_text"])
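To see what the chat template step does without downloading the model, here is a small pure-Python approximation of the prompt string it builds. The helper `format_zephyr_prompt` is hypothetical, and the role tags, the `</s>` end-of-sequence token, and the exact whitespace are assumptions based on the format shown on the Zephyr model card. In real code, rely on `apply_chat_template` so the format always matches the model.

```python
def format_zephyr_prompt(messages, eos_token="</s>", add_generation_prompt=True):
    """Approximate the prompt string that the chat template produces.

    Hypothetical helper for illustration only; tags and whitespace are assumed.
    """
    parts = []
    for message in messages:
        # Each turn: a role tag on its own line, the text, then end-of-sequence.
        parts.append(f"<|{message['role']}|>\n{message['content']}{eos_token}\n")
    if add_generation_prompt:
        # Leave an open assistant turn for the model to complete.
        parts.append("<|assistant|>\n")
    return "".join(parts)

messages = [
    {
        "role": "system",
        "content": "You are a friendly chatbot who always responds in the style of a pirate",
    },
    {"role": "user", "content": "How many helicopters can a human eat in one sitting?"},
]

print(format_zephyr_prompt(messages))
```

The point of the template is that the model was fine-tuned on text laid out this way, so prompts in the same layout tend to give better answers than a bare question.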

Bias, Risks, and Limitations

Zephyr-7B-α hasn’t been aligned to human preferences using techniques like RLHF or deployed with in-the-loop filtering of responses like ChatGPT, so the model can potentially produce problematic outputs, especially when prompted to do so.

The size and composition of the corpus used to train the base model (mistralai/Mistral-7B-v0.1) are also unknown.

However, it is likely that it included a mix of Web data and technical sources such as books and code.

See the Falcon 180B model card for an example of this.

Training and evaluation data

Zephyr-7B-α achieves the following results on the evaluation set:

- Loss: 0.4605

- Rewards/chosen: -0.5053

- Rewards/rejected: -1.8752

- Rewards/accuracies: 0.7812

- Rewards/margins: 1.3699

- Logps/rejected: -327.4286

- Logps/chosen: -297.1040

- Logits/rejected: -2.7153

- Logits/chosen: -2.7447
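A quick way to read these numbers, assuming the metric names carry their usual meaning in DPO training logs: the margin is the chosen reward minus the rejected reward, and the accuracy is the fraction of evaluation pairs where the chosen response received the higher reward. The sketch below checks the first relationship against the reported values and shows the second on made-up data. The helper `reward_accuracy` and the toy rewards are hypothetical.

```python
reported = {
    "rewards/chosen": -0.5053,
    "rewards/rejected": -1.8752,
    "rewards/margins": 1.3699,
}

# Margin = chosen reward minus rejected reward.
margin = reported["rewards/chosen"] - reported["rewards/rejected"]
print(round(margin, 4))  # 1.3699, matching the reported rewards/margins

def reward_accuracy(chosen_rewards, rejected_rewards):
    """Fraction of pairs where the chosen response gets the higher reward."""
    wins = sum(c > r for c, r in zip(chosen_rewards, rejected_rewards))
    return wins / len(chosen_rewards)

# Made-up rewards for four preference pairs, purely for illustration.
toy_chosen = [0.2, -0.4, -1.0, 0.5]
toy_rejected = [-1.5, -0.1, -2.0, -0.3]
print(reward_accuracy(toy_chosen, toy_rejected))  # 0.75
```

Read this way, a Rewards/accuracies value of 0.7812 says the model preferred the chosen response in roughly 78% of evaluation pairs.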

Framework versions

- Transformers 4.34.0

- PyTorch 2.0.1+cu118

- Datasets 2.12.0

- Tokenizers 0.14.0

Summary

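Zephyr-7B-α is a 7B parameter assistant model, fine-tuned from mistralai/Mistral-7B-v0.1 with Direct Preference Optimization (DPO) on publicly available synthetic datasets. It is easy to try through the Hugging Face demo or the pipeline() function, but because it can produce problematic outputs when prompted to do so, it should be used for educational and research purposes only.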

Happy Learning And Keep Learning…

Thank You…
